DeepGate

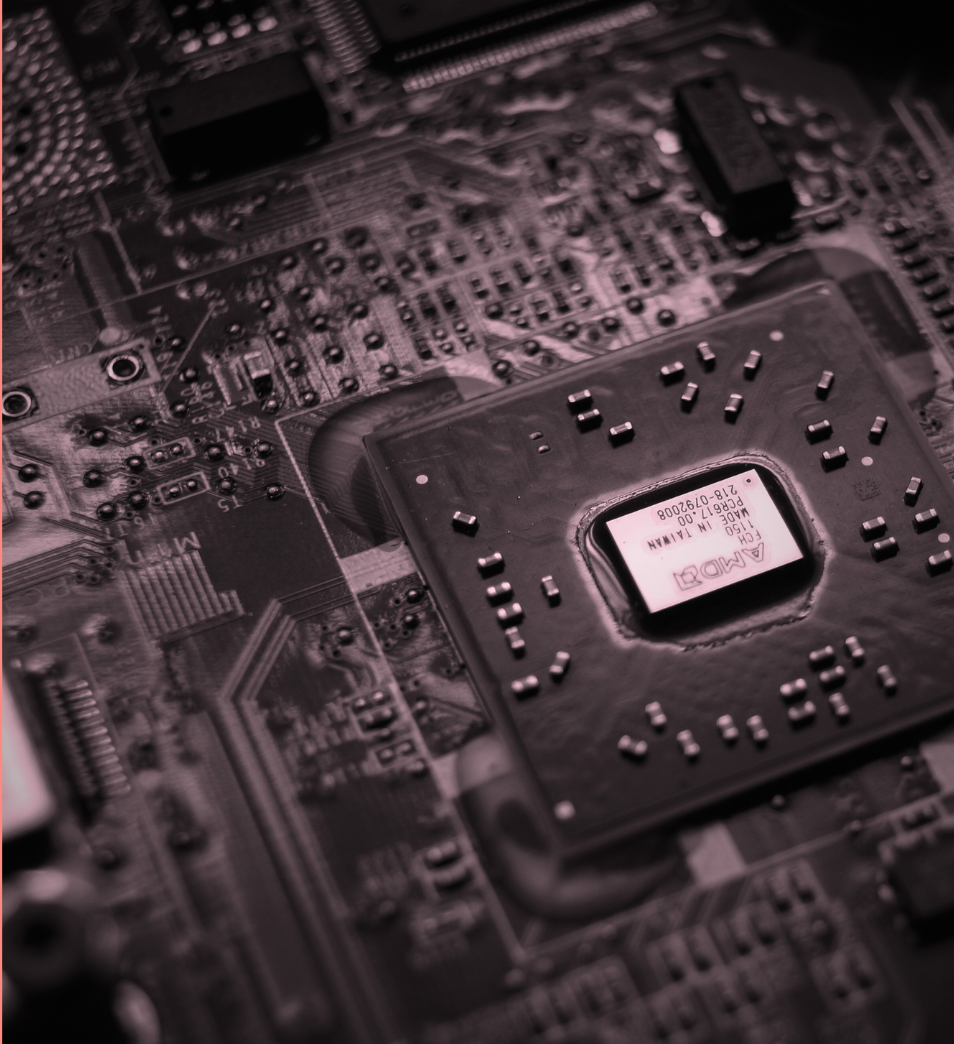

DeepGate enables AI models to run on standard CPU hardware, removing the dependency on specialised GPUs. This reduces compute costs and broadens the range of resource-constrained environments where AI can be deployed. By shifting AI workloads to CPUs, DeepGate allows models to run on edge devices such as cameras, drones, and sensors without relying on cloud infrastructure.

Summary