Search engines that think like humans

By Jamie Macfarlane | October 12, 2022

Iain Mackie leads NLP investments at Creator Fund and previously worked in Quant trading. He is finishing a PhD in neural search systems at the University of Glasgow and recently won the $500k grand prize at the Alexa TaskBot Challenge.🤖

Today we announce that Creator Fund has led a £660,000 Pre-Seed investment into Marqo. Marqo allows search engines to think like humans through neural search. The Melbourne-based company is led by two senior engineers from Amazon Robotics and Australia. 🚀

What is neural search?

Search, or information retrieval, is the study of retrieving relevant information given a user query. The most obvious example is Google, where a user inputs a query and Google will return a ranked list of web documents. Search is complex because:

- Indexes can have millions or billions of documents.

- Relevance judgements mapping user requests to documents can be incredibly sparse.

- Documents can be long with many different modalities (text, structured information, images, and videos).

Historically, because of scale and computational cost, search systems were based on inverted indexes, where each word maps to the list of documents that contain it, making it easy to run simple algorithms over billions of documents. For example, for the query “good startup”, search algorithms such as BM25 would return results containing the words “good” and “startup”. However, with the explosion of large language models (LLMs), some with billions of parameters (SBERT, BERT, T5, GPT3, etc.), neural search systems have vastly improved search effectiveness. Specifically, LLMs can draw semantic connections between similar terms, e.g. between “good startup” and phrases like “scalable”, “strong founders”, and “high growth”. In addition, there have also been breakthroughs in multimodal neural models (CLIP, DALLE, etc.) that allow for aligned multimodal indexing and search. So, for example, you could search for images or videos that align with our “good startup” query (maybe the picture of Marqo below!).
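To make the lexical side concrete, here is a minimal sketch of an inverted index with BM25 ranking. The three-document corpus is invented for illustration, `k1` and `b` are set to commonly used defaults, and the IDF formula is one common BM25 variant rather than the exact scoring of any particular engine.

```python
import math
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
DOCS = {
    "d1": "a good startup needs a good team",
    "d2": "scalable company with strong founders and high growth",
    "d3": "the weather is good today",
}

def tokenize(text):
    return text.lower().split()

def build_inverted_index(docs):
    """Map each term to {doc_id: term frequency}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, tf in Counter(tokenize(text)).items():
            index[term][doc_id] = tf
    return index

def bm25_search(query, docs, index, k1=1.5, b=0.75):
    """Rank the documents that share at least one term with the query."""
    n_docs = len(docs)
    lengths = {d: len(tokenize(t)) for d, t in docs.items()}
    avg_len = sum(lengths.values()) / n_docs
    scores = defaultdict(float)
    for term in tokenize(query):
        postings = index.get(term, {})
        if not postings:
            continue
        # Rarer terms get a higher inverse document frequency.
        idf = math.log(1 + (n_docs - len(postings) + 0.5) / (len(postings) + 0.5))
        for doc_id, tf in postings.items():
            norm = tf + k1 * (1 - b + b * lengths[doc_id] / avg_len)
            scores[doc_id] += idf * tf * (k1 + 1) / norm
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

index = build_inverted_index(DOCS)
ranking = bm25_search("good startup", DOCS, index)
print(ranking)
```

Note that `d2` never appears in the ranking: it shares no words with the query, even though it describes exactly the qualities the article associates with a “good startup”. That gap is what neural search aims to close.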

Multimodal search can be extremely beneficial in many applications (search engines, image recommendation, product search, etc.). The problem is that effective and robust search systems require technical experts to implement them and to keep them up to date in this fast-moving field. Now that’s where Marqo comes in…

What is Marqo?

Marqo is developing “tensor search for humans” that improves search relevance for multimodal search applications (text 💬, image 🖼️, video 📽️), while being simple to set up and scale. Whereas many current neural databases require specialised search engineers, Marqo is a simple API where developers can index and query within seconds. Specifically, Marqo combines the robustness and expressiveness of traditional search engines, which allow for complex filters and lexical search, with your favourite multimodal neural search models such as SBERT and CLIP. Furthermore, Marqo’s cloud platform lets customers easily deploy their applications with pay-per-use pricing and reduces costs through pooled resources ☁️. They are already powering child-friendly search engines, NFT recommendation systems, and much more!

More than just the technology, we are backing two incredible founders. Jesse has a PhD in Physics from La Trobe University, held postdocs at UCL and Stanford, and was a Lead Machine Learning Scientist at Alexa and Amazon Robotics AI. Tom Hamer has computer science and economics degrees from the Australian National University and Cambridge, and was a software engineer within AWS’ ElasticSearch and Database teams. Together, they have built a team of search enthusiasts with the passion and skillset to give developers worldwide effortless access to the next generation of search.

Marqo’s open-source GitHub repository has reached 1.1k+ 🌟 in 6 weeks (top 10 trending libraries!). They have also launched the cloud beta, which lets customers pay per use and reduces costs through pooled resources (join waiting list). Lastly, they are building a community of search enthusiasts tackling different problems (Slack).

Topical news summarisation

Now for the fun bit…

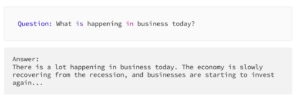

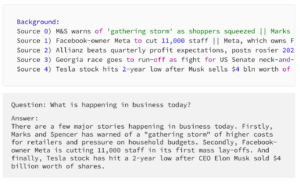

I wanted to build a fun search application within minutes to show the ease and power of Marqo. I decided to build a news summarisation application, i.e. one that answers questions like “What is happening in business today?” by synthesising an example news corpus (link).

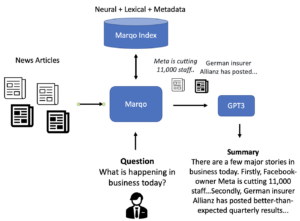

The plan is to use Marqo’s search to provide useful context for a generation algorithm; we use OpenAI’s GPT3 API (link). This is more formally called “retrieval-augmented generation” and helps with generation tasks that require specific knowledge that the model has not seen during training, for example company-specific documents or news data that’s “in the future” relative to the training data. Overview of what we’re planning:
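The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not the demo's actual code: `ask`, `stub_retrieve`, and `stub_generate` are hypothetical names, and the stubs stand in for the search engine and the language model so the sketch runs offline.

```python
from typing import Callable, List, Optional

def ask(
    question: str,
    generate: Callable[[str], str],
    retrieve: Optional[Callable[[str], List[str]]] = None,
) -> str:
    """Answer a question, optionally grounding the generator in retrieved passages."""
    prompt = f"Question: {question}\nAnswer:"
    if retrieve is not None:
        passages = retrieve(question)
        prompt = "Context:\n" + "\n".join(passages) + "\n\n" + prompt
    return generate(prompt)

# Offline stand-ins: neither calls a real search engine or language model.
def stub_retrieve(question: str) -> List[str]:
    return ["Facebook-owner Meta is cutting 11,000 staff"]

def stub_generate(prompt: str) -> str:
    # Echo the prompt so it is visible what the model would have been given.
    return prompt

question = "What is happening in business today?"
without_context = ask(question, stub_generate)
with_context = ask(question, stub_generate, stub_retrieve)
print(with_context)
```

The only difference between the two calls is the text placed ahead of the question, which is the whole idea: the model itself is unchanged, and the retrieved passages supply the knowledge it lacks.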

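Without any retrieved context, GPT3 can only answer from what it saw during training. In fact, anyone following the financial markets knows that statements like “the economy is slowly recovering” and “businesses are starting to invest again” are completely wrong!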

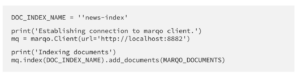

To solve this, we first need to start our Marqo Docker container, which exposes the API that we’ll call from Python during this demo.

Next, let’s look at our example news document corpus, which contains BBC and Reuters news content from the 8th and 9th of November. Each document has an “_id” (the Marqo document identifier), a “date” (when the article was written), a “website” (the web domain), a “Title” (the headline), and a “Description” (the article body).
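As a rough sketch of that document shape, the snippet below checks a record against the five fields named above. The `validate_document` helper is hypothetical, the ISO date format is assumed from the ‘2022-11-09’ value used later in the demo, and the field values are placeholders rather than entries from the real corpus.

```python
from datetime import date

REQUIRED_FIELDS = ("_id", "date", "website", "Title", "Description")

def validate_document(doc: dict) -> dict:
    """Check that a news document has every expected field and an ISO date."""
    missing = [field for field in REQUIRED_FIELDS if field not in doc]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    date.fromisoformat(doc["date"])  # raises ValueError on a malformed date
    return doc

# Placeholder record: the values are illustrative, not taken from the real corpus.
example = validate_document({
    "_id": "article_0",
    "date": "2022-11-09",
    "website": "<web domain>",
    "Title": "<headline>",
    "Description": "<article body>",
})
print(sorted(example))
```

Keeping the date as a plain ISO string makes it straightforward to filter on an exact day at query time.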

We then index our news documents, and Marqo manages both the lexical index and the neural embeddings. By default, Marqo uses SBERT for neural text embedding and natively supports complete OpenSearch lexical and metadata functionality.

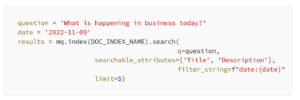

Now that we have indexed our news documents, we can simply use Marqo’s Python search API to return relevant context for our GPT3 generation. For the query “q”, we pass the question, and we match news context against the “Title” and “Description” text. We also filter our documents for “today”, which was ‘2022-11-09’.
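A hedged sketch of that search step follows. It assumes an index object whose `search` method accepts `q`, `searchable_attributes`, `filter_string`, and `limit` and returns a dictionary with a `"hits"` list, which is how I understand the Marqo Python client to behave; check the client documentation for your version. `retrieve_news` and `FakeIndex` are hypothetical names, and the fake index lets the snippet run without a Marqo server.

```python
from typing import Any, Dict, List

def retrieve_news(index: Any, question: str, day: str, limit: int = 5) -> List[Dict]:
    """Search Title and Description for the question, restricted to one date."""
    results = index.search(
        q=question,
        searchable_attributes=["Title", "Description"],
        filter_string=f"date:{day}",  # assumed filter syntax: field:value
        limit=limit,
    )
    return results["hits"]

class FakeIndex:
    """Offline stand-in that records the arguments it was called with."""

    def __init__(self) -> None:
        self.last_call: Dict[str, Any] = {}

    def search(self, **kwargs: Any) -> Dict[str, List[Dict]]:
        self.last_call = kwargs
        return {"hits": [{"Title": "<headline>", "Description": "<article body>"}]}

fake = FakeIndex()
hits = retrieve_news(fake, "What is happening in business today?", "2022-11-09")
print(fake.last_call["filter_string"])
```

With a real client, the `index` argument would be the handle for the news index instead of `FakeIndex()`.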

Next, we insert Marqo’s search results into the GPT3 prompt as context, and we try generating an answer again.
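One way to turn search hits into a prompt is sketched below. The prompt wording, the `build_prompt` name, and the `max_chars` budget are all assumptions for illustration; the demo's actual prompt may differ. Each hit is assumed to be a dictionary carrying the “Title” and “Description” fields of the indexed document.

```python
from typing import Dict, List

def build_prompt(question: str, hits: List[Dict], max_chars: int = 2000) -> str:
    """Format retrieved articles as background text ahead of the question."""
    lines = []
    used = 0
    for hit in hits:
        line = f"- {hit['Title']}: {hit['Description']}"
        if used + len(line) > max_chars:
            break  # keep the prompt within a rough size budget
        lines.append(line)
        used += len(line)
    context = "\n".join(lines)
    return f"Background:\n{context}\n\nQuestion: {question}\nAnswer:"

# Headline taken from the article's results; the body is a placeholder.
sample_hits = [
    {"Title": "Facebook-owner Meta is cutting 11,000 staff", "Description": "<article body>"},
]
prompt = build_prompt("What is happening in business today?", sample_hits)
print(prompt)
```

The resulting string is what would be sent to the generation API. Capping the context length is a simple guard against exceeding the model's input limit when many articles match.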

Success! You’ll notice that using Marqo to add relevant and temporally correct context means we can build a news summarisation application with ease. So instead of wrong and vague answers, we get factually grounded summaries based on retrieved facts such as:

- Marks and Spencer has warned of a “gathering storm” of higher costs for retailers

- Facebook-owner Meta is cutting 11,000 staff

- Tesla stock has hit a 2-year low after CEO Elon Musk sold $4 billion worth of shares

Full code: here (you’ll need a GPT3 API token)

Visit Marqo on GitHub: https://github.com/marqo-ai/marqo/tree/mainline/examples/GPT3NewsSummary